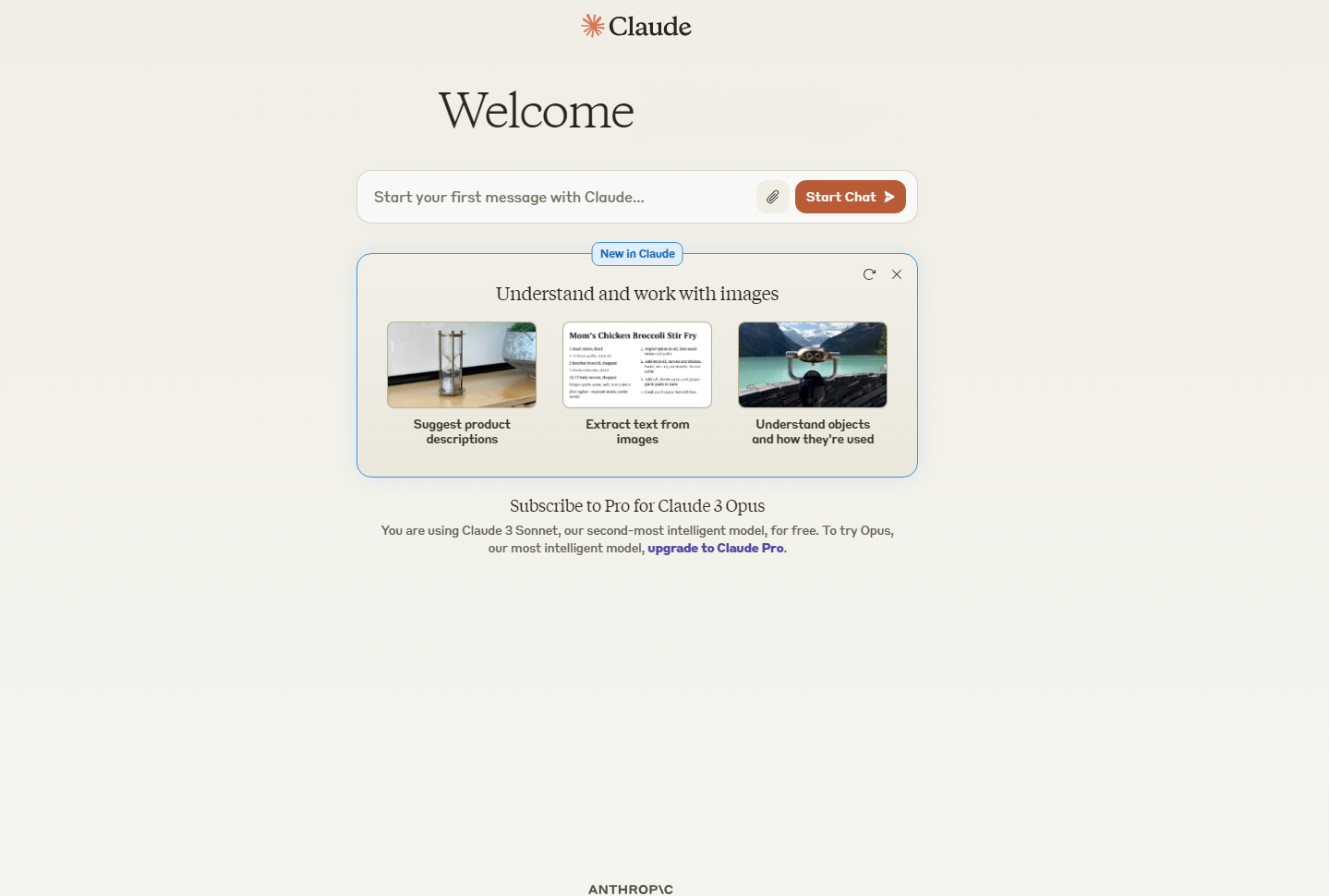

Bard's rebranding as Gemini was recently the talk of the AI town! Soon after, Anthropic released a new version of Claude. Both come with major changes and additions, which left me with one question about Claude vs Gemini: which one is worth the time and money?

I have been interested in the AI world since 2020 and, like many others, I have used AI tools to help with research. The new, more advanced models stirred my curiosity, and I wanted to know how far I could push their capabilities.

After reading countless articles about Claude and Gemini, I tested the performance of both tools myself. To do so, I ran common prompts for:

- General Reasoning and Comprehension

- Mathematical Reasoning

- Code Generation

So, let’s dive in and see which one shines in the detailed comparison!

Claude vs Gemini 2024: Quick Comparison for Insights

Check out the following table to understand the key differences between Claude and Gemini:

| Model Name | Claude AI | Gemini AI |
|---|---|---|
| Parent Company | Anthropic | Google DeepMind |
| Model Family | Claude 3 (Haiku, Sonnet, Opus) | Gemini (Ultra, Pro, Nano) |
| Source | Open-ended | Closed-ended |
| Focus | Creative and exploratory content | Research-based and factual information |
| Number of Users | 19.8 Million | 2 Million |
| Available Regions | UK & USA | Over 170 countries |
| Technology | Constitutional AI | Pro 1.0 and Ultra 1.0 |
| Token Limit | 200K | 2048 Tokens |
| Price | $20/Month | $19.99/Month |
| Code Generation | Provides detailed code | Provides great code examples in Python, Java, C++, Go, and more |
| General Reasoning | Lacks general intelligence | Specially designed to solve complex reasoning queries |
| Mathematical Reasoning | Basic math capabilities | Lacks complex problem-solving skills |

Claude vs Gemini – 2024 Overview

Before we dive into the detailed comparison, let’s take a quick look at both tools to understand what they offer and which features they recently launched.

As of March 2024, both Claude and Gemini are gearing up for more powerful features and abilities, and both report impressive benchmark figures. However, since the newest versions aren’t publicly available yet, a comparison of those has to wait.

What is Claude AI?

Claude AI is designed explicitly as a conversational tool that understands a wide range of languages and task-oriented prompts.

Also, you do not need to worry about privacy issues, as the tool prioritizes your safety.

What’s New With Claude AI?

Anthropic recently launched the Claude 3 family of AI language models: Haiku, Sonnet, and Opus, each priced differently.

Of these, only Opus and Sonnet are currently available, across 159 countries. See the official Claude 3 announcement to check its performance metrics.

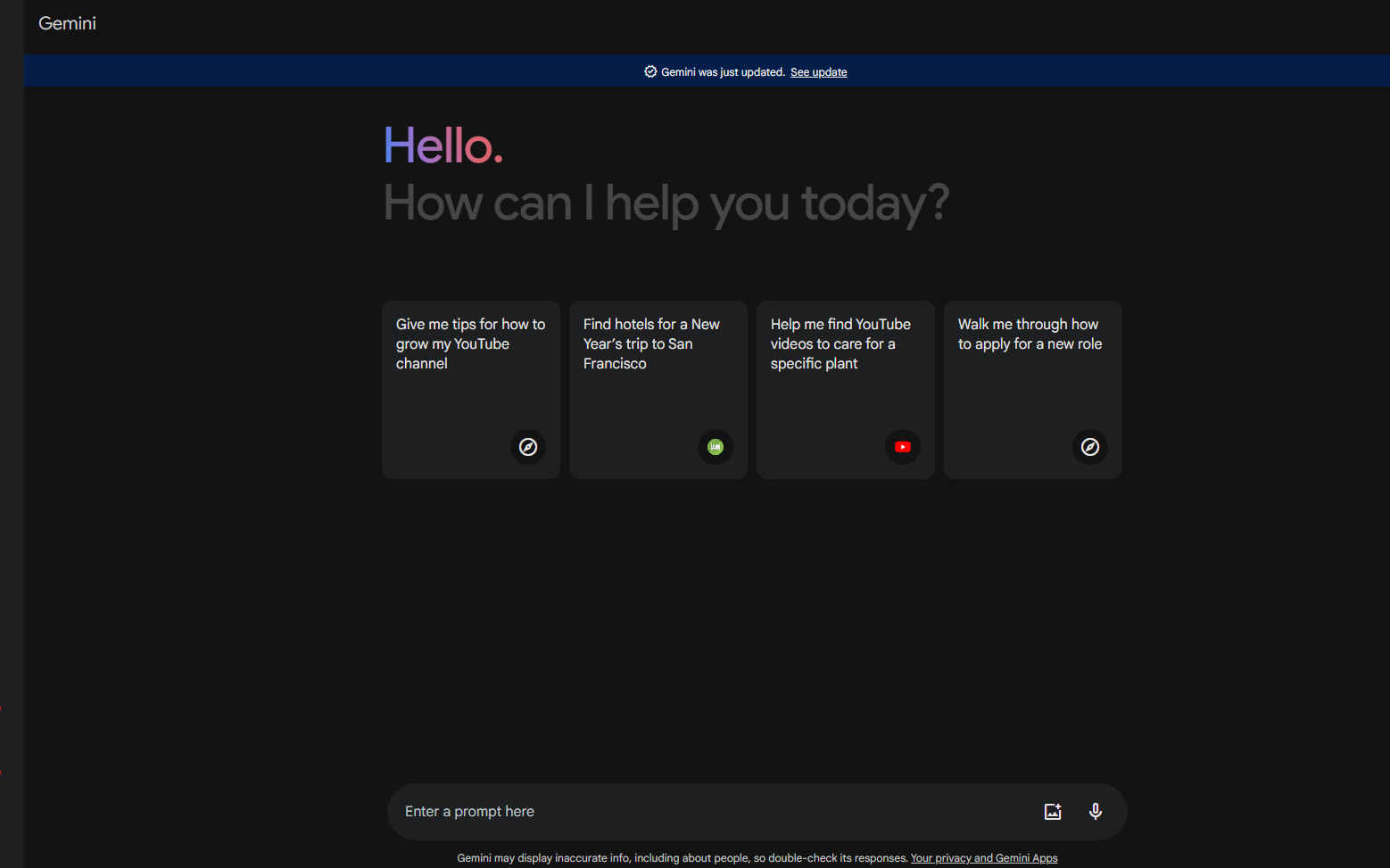

What is Gemini AI?

Gemini is a multimodal tool capable of processing a wide range of data types, including text, images, audio, and video. Gemini Ultra, Pro, and Nano are the members of the Google Bard/Gemini family.

These models are aimed at specific applications, such as:

- Mobile apps

- Cloud-based tasks

What’s New With Gemini?

The Google team is already working on the next generation of Gemini, Gemini 1.5, which has shown truly promising results, outranking other leading language models.

For example, it can handle a context of up to 1M tokens and retains context better. Read the official blog on Gemini 1.5 to know more.

Performance Capabilities – Claude AI & Google Gemini

To keep the Claude vs Gemini comparison brief, I have included only the three benchmarks for which comparable figures are available for both models. So, let’s go through them below! (Source: Bito.ai)

| Performance | Claude | Gemini |
|---|---|---|
| General Reasoning and Comprehension – MMLU | 78.5% | 90% |
| Mathematical Reasoning – GSM8K | 88% | 94.4% |
| Code Generation – HumanEval | 71.2% | 74.4% |

Now that we have gone through the benchmark statistics for Claude and Gemini, let’s test their performance.

Note: The following three tests are my own. I ran the same prompts on both AI tools, one each for General Reasoning and Comprehension, Mathematical Reasoning, and Code Generation.

1. General Reasoning and Comprehension

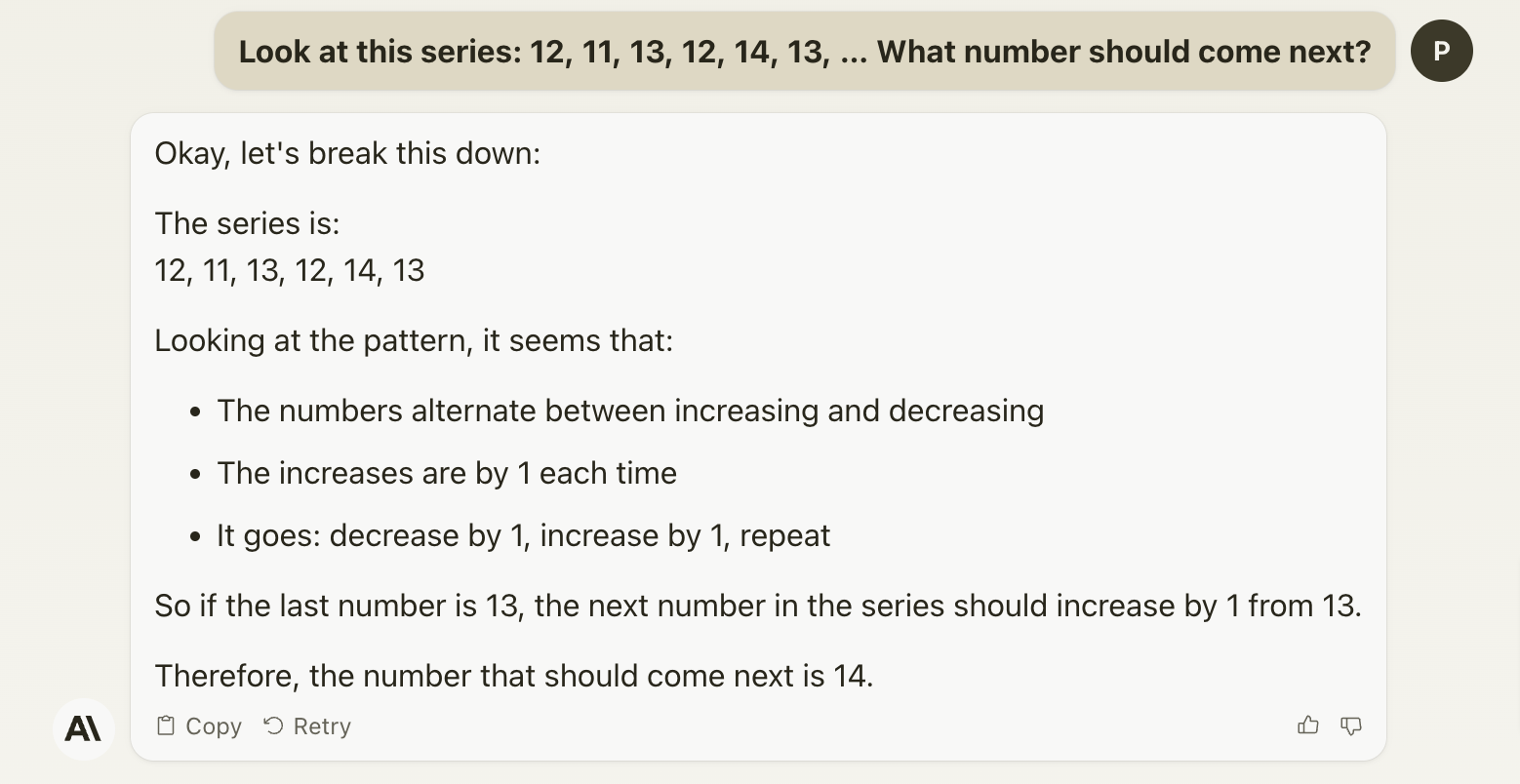

I gave the same prompt to both Claude and Gemini AI –

“Look at this series: 12, 11, 13, 12, 14, 13, … What number should come next?”

- Claude’s General Reasoning:

I already knew the answer, but I wanted to test both AI tools’ General Reasoning and Comprehension skills. This is what Claude responded:

If you know even a little bit of reasoning, you can immediately tell the answer is wrong.

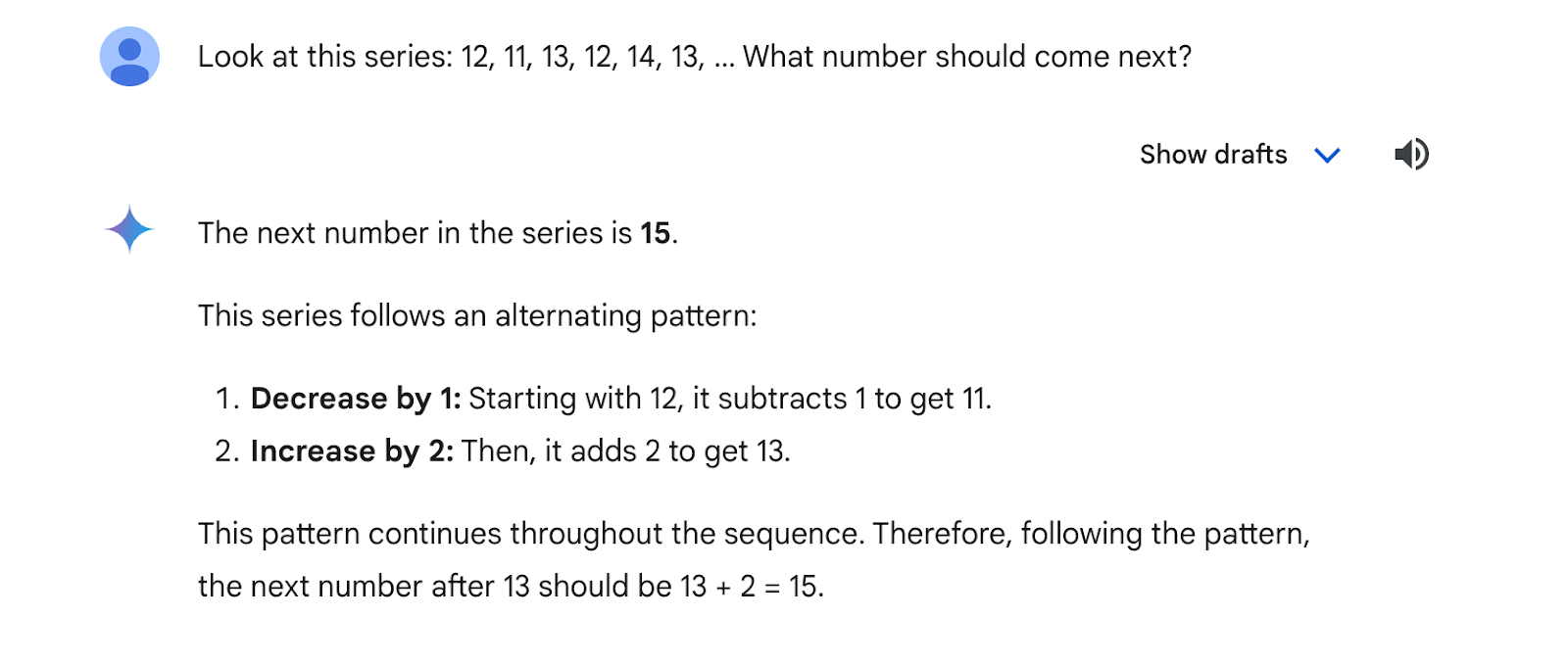

- Gemini’s General Reasoning:

Moving ahead, the response Gemini gave me was surprising, because although I knew the answer, I had not thought through the detailed explanation. For me, the answer was 15 because the first, third, and fifth numbers (12, 13, 14) are consecutive.

So, it is evident that the seventh number continues that sequence.
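The reasoning above can be sketched in a few lines of Python. This is only an illustration of the "two interleaved runs" idea, not the output of either tool, and the function name `next_term` is a hypothetical helper introduced here. It assumes each interleaved run changes by a constant step.

```python
def next_term(series):
    """Predict the next term, assuming the odd and even positions
    form two separate runs that each change by a constant step."""
    # Pick the run that the upcoming position belongs to
    # (same index parity as the next 0-based index, len(series)).
    run = series[len(series) % 2::2]
    step = run[-1] - run[-2]
    return run[-1] + step


series = [12, 11, 13, 12, 14, 13]
print(next_term(series))  # 15
```

Here the upcoming seventh term belongs to the run 12, 13, 14, so the sketch returns 15, matching the answer described above.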

On that note, this was the Gemini AI’s response:

Quick Verdict: Gemini AI has better reasoning capabilities than Claude.

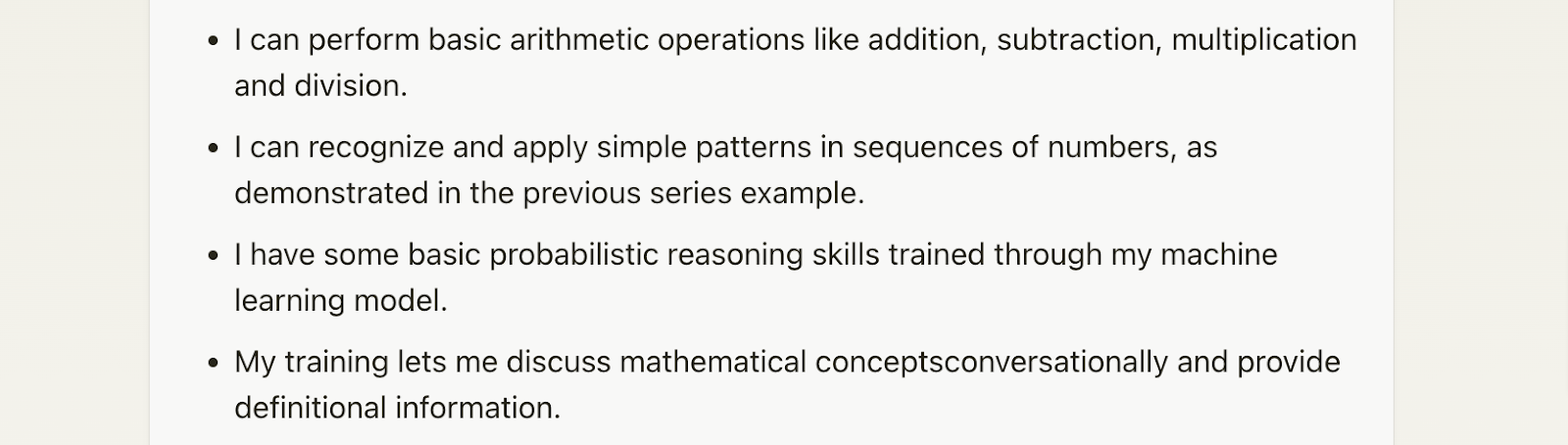

This matches what the tools told me beforehand. Before running the prompt, I asked Claude about its reasoning and comprehension capabilities, and the tool said it did not have them: it was just an AI assistant and lacked general intelligence.

On the other hand, when I asked Gemini the same question, the tool replied that it is specially designed for doing complex reasoning tasks. (And it indeed proved itself!)

2. Mathematical Reasoning

I was curious about Claude’s and Gemini’s mathematical reasoning. Given my experience with general reasoning and comprehension, I had some expectations from Gemini AI and not so much from Claude.

To my surprise, neither tool could perform the task well.

- Claude’s Mathematical Reasoning:

In Claude’s case, the Anthropic model was honest enough to state that it has only basic mathematical reasoning capabilities.

So, I gave the tool a question to solve:

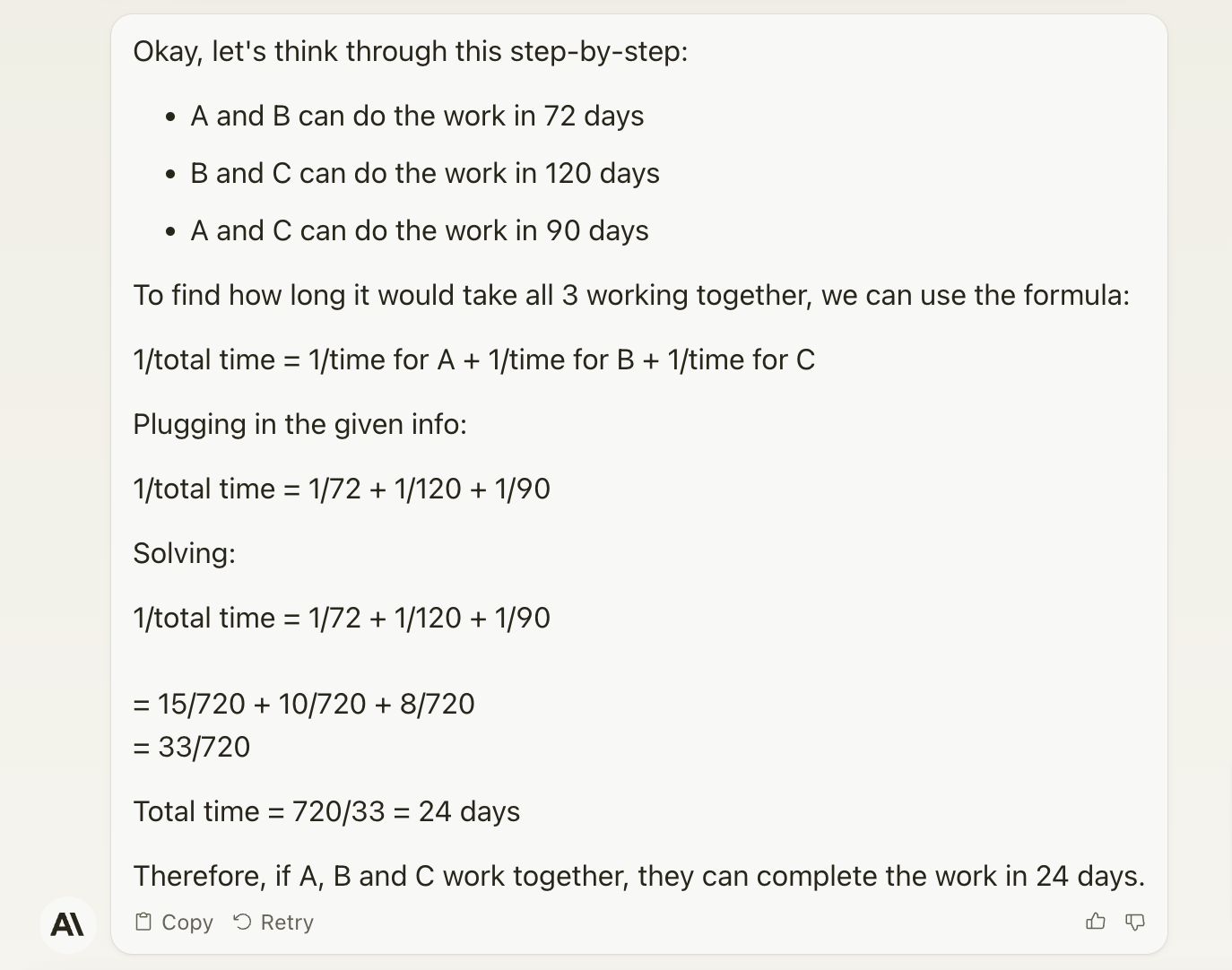

A and B can do a piece of work in 72 days. B and C can do it in 120 days. A and C can do it in 90 days. In how many days can all three do the work together?

Unfortunately, the answer was inaccurate:

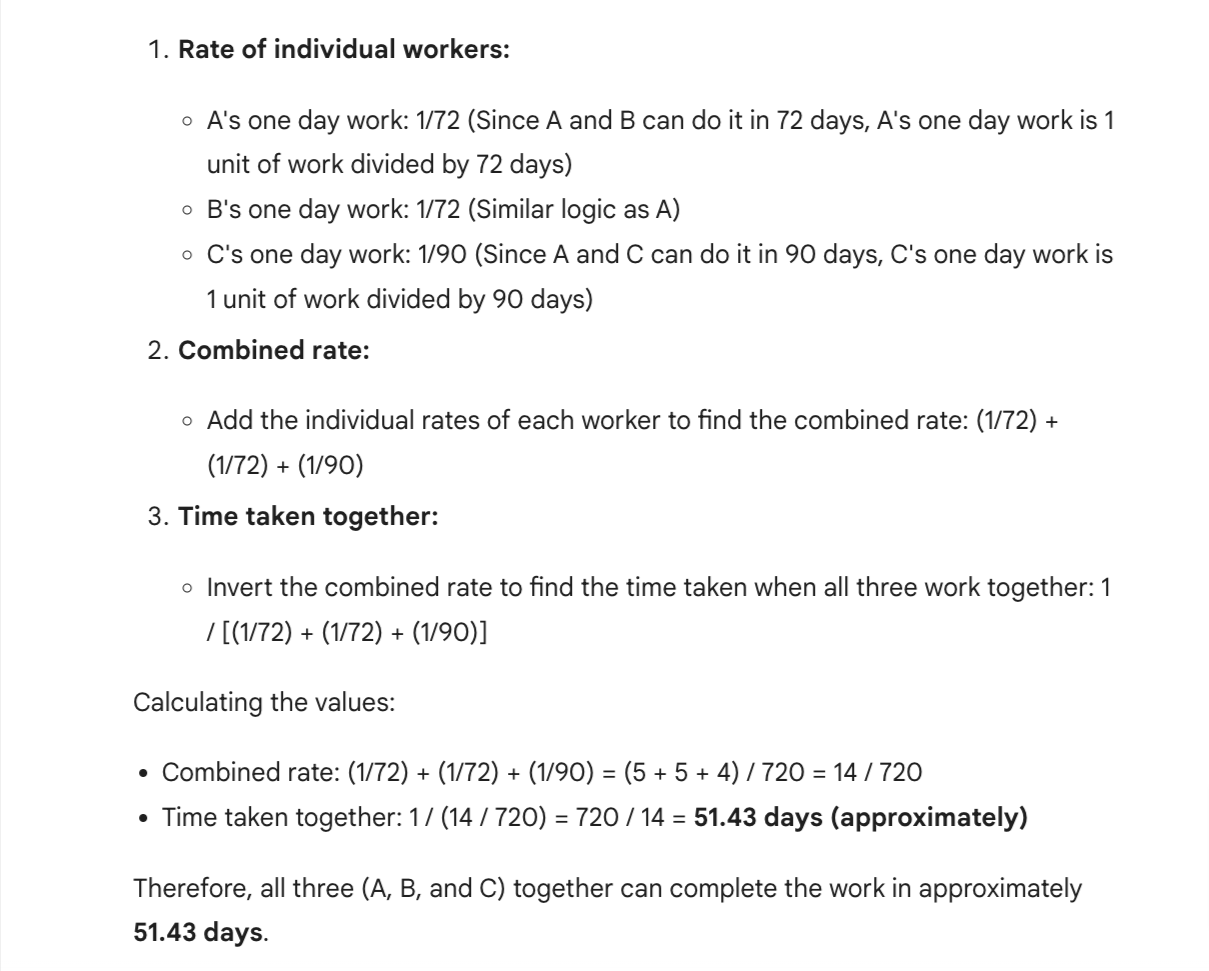

- Gemini Mathematical Reasoning:

I thought Gemini AI would give me the correct result, so I entered the same question as the prompt. Its response was not accurate either. The answer was supposed to be 60 days.
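The 60-day figure can be checked with a little arithmetic. Adding the three pair rates counts each worker twice, so the combined rate of A, B, and C is half of 1/72 + 1/120 + 1/90, which works out to 1/60 of the job per day. The sketch below is an illustration only (the function name `days_together` is hypothetical, and this is not the output of either tool); it uses exact fractions to avoid rounding errors.

```python
from fractions import Fraction


def days_together(ab_days, bc_days, ac_days):
    """Days needed when A, B, and C all work together,
    given the days each pair needs on its own."""
    # Each pair's rate is the fraction of the job done per day.
    pair_rates = Fraction(1, ab_days) + Fraction(1, bc_days) + Fraction(1, ac_days)
    # The sum counts every worker twice, so halve it.
    combined_rate = pair_rates / 2
    return 1 / combined_rate


print(days_together(72, 120, 90))  # 60
```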

I will give the tool some brownie points for coming somewhat closer:

Quick Verdict: Gemini AI has better mathematical reasoning than Claude. Its answer was somewhat closer because the tool has a basic understanding of math equations.

However, if you are looking for an AI with accurate mathematical reasoning, ChatGPT was reliable in my test: I entered the same prompt into the OpenAI model, and it gave me the exact answer.

3. Code Generation

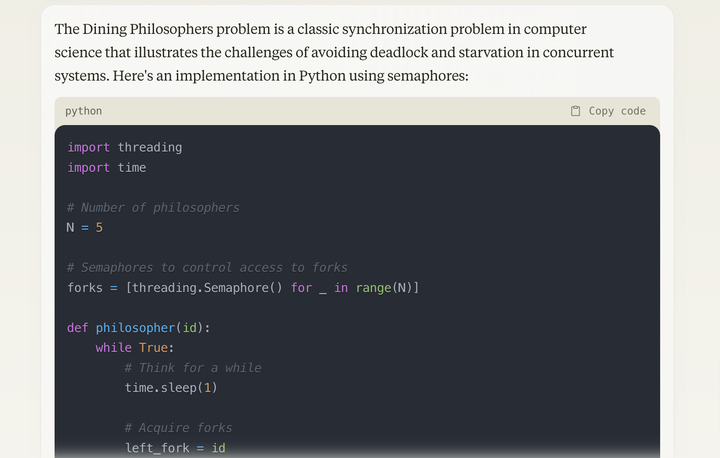

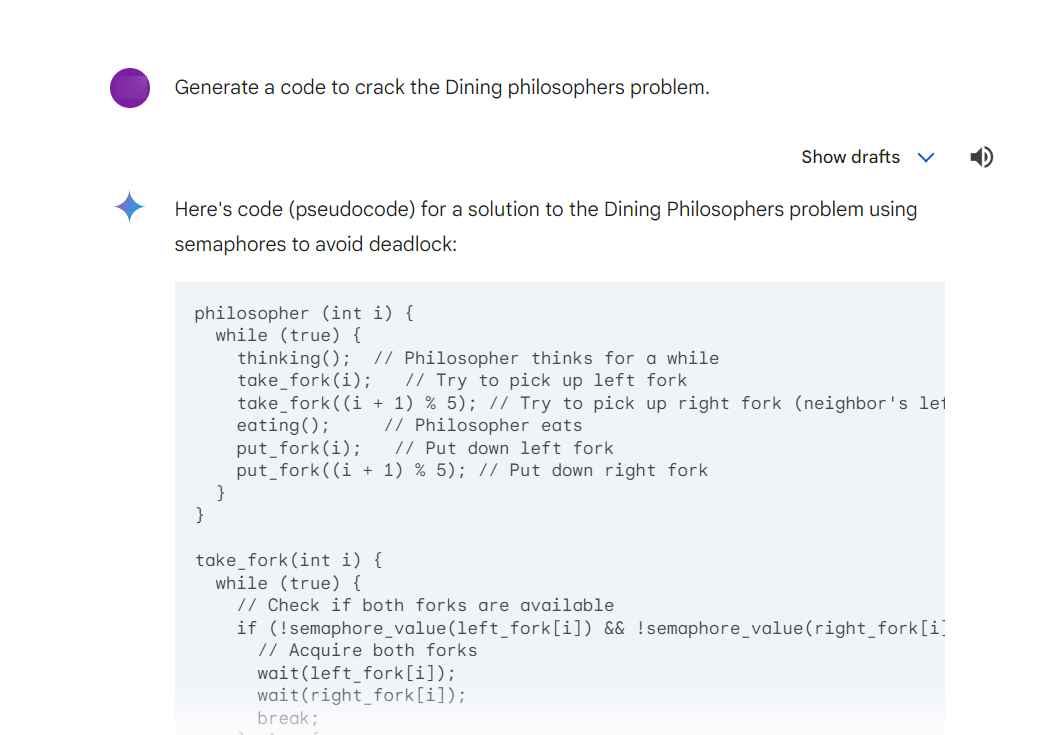

I gave Claude and Gemini a prompt based on the famous Dining Philosophers Problem, one of the toughest problems asked in coding interviews.

Claude and Gemini made this seem like a piece of cake, with quick responses. However, since I am not a programming expert, I will leave it to you to verify the accuracy of the responses. Try the prompt yourself!

- Claude’s Coding Potential:

For once, Claude did not disappoint me and generated code from my prompt.

Check out the following result for Claude’s code generation:

- Gemini’s Coding Potential:

Gemini was also quick to provide the code for this complex problem. Along with it, the tool provided an explanation and some tips towards the end.

Quick Verdict: Both Claude and Gemini provided the required code for the prompt, but Gemini took it further with the explanation and tips.
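If you want a baseline to verify either tool's response against, here is a minimal sketch of one well-known approach to the Dining Philosophers Problem, resource ordering: every philosopher picks up the lower-numbered fork first, so a circular wait (and therefore a deadlock) cannot form. This is not the code Claude or Gemini produced. The function name `dine` and its parameters are assumptions made for illustration, and the sketch assumes at least two philosophers.

```python
import threading


def dine(num_philosophers=5, meals_each=3):
    """Run the simulation and return how many meals each philosopher ate.
    Assumes num_philosophers >= 2."""
    # One lock per fork; fork i sits between philosopher i and i + 1.
    forks = [threading.Lock() for _ in range(num_philosophers)]
    meals = [0] * num_philosophers

    def philosopher(i):
        left, right = i, (i + 1) % num_philosophers
        # Resource ordering: always take the lower-numbered fork first.
        first, second = min(left, right), max(left, right)
        for _ in range(meals_each):
            with forks[first]:
                with forks[second]:
                    meals[i] += 1  # "eat" while holding both forks

    threads = [
        threading.Thread(target=philosopher, args=(i,))
        for i in range(num_philosophers)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return meals


print(dine())  # [3, 3, 3, 3, 3]
```

When checking a generated solution, the main things to look for are the same: both forks are held while eating, and there is some rule (ordering, a waiter, or a limit on seated philosophers) that prevents all philosophers from holding one fork each forever.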

Claude vs Gemini: Key Features – Comparison

Both Claude and Gemini are language models that have somewhat similar parameters.

Gemini is a factual and research-based language model, whereas Claude mainly focuses on creative and exploration abilities.

On that note, let’s compare a few key features of both tools:

Claude Strengths

- Claude AI is best known for generating imaginative and diverse text formats.

- Pushes the creative and exploration boundaries

- Open-ended language model

- Good at handling challenges and returning effective solutions

- Access to Claude is currently limited. You can also only use it for research purposes.

Gemini Strengths

- Google Gemini is best known for its factual responses, offering detailed and accurate information that makes it a good fit for research.

- The tool is also suited to conversation, as you get informative, dialogue-like responses.

- Since Gemini is part of the Google ecosystem, there is high potential for integration with other Google services and products. Plus, you get extra innovative functions through it.

- Like Claude, Gemini’s access is primarily available to researchers and developers. However, you can explore the tool’s basic features.

Claude vs Gemini: Subscription Plans

Check out the subscription plans of Claude vs Gemini in the table below:

| AI Tool | Subscription Plan | Price | Available Regions |
|---|---|---|---|
| Claude AI | Claude Pro | $20/Month | UK & USA |
| Gemini AI | Gemini Advanced | $19.99/Month | 170+ countries |

Claude currently offers only a single paid service, Claude Pro, which costs $20/month.

This service is only available to users residing in the UK and the USA.

On the other hand, Gemini Advanced is available in over 170 countries. It is included in the Google One AI Premium Plan, which costs $19.99/month.

Just like Claude, Google Gemini is not available in all regions. So, to check whether the tool is available in your location, head to its official link.

Benefits: What Will I Get If I Subscribe To Claude Pro?

- 5x more usage than the free plan

- In case of high traffic, you will have priority access and thus get faster responses.

- In case of new features, you will get early access

- You can switch between Claude models depending on your tasks, though this feature is quite limited.

Benefits: What Will I Get If I Subscribe To Gemini Advanced?

- You get access to Gemini Advanced, which works with Google services such as Docs, Sheets, and Google Meet. It is powered by the Gemini 1.0 Ultra model, which is larger and more capable than the model behind the free version.

- Access to cloud storage of 2 TB.

- You can utilize additional features, including call recording, Google Photos, Meet, Calendar, Premium scheduling option, and automatic photo organization.

Conclusion: Gemini is best for informative data & Claude is better for humanized content

Depending on your specific needs, you can choose Claude or Gemini as your go-to tool.

For example, Gemini is your best bet if you are looking for factual or informative data, especially regarding Google’s integrated products.

On the other hand, Claude is best suited for creative and explorative prompts. You can even use the tool specifically for conversations. I ran three performance-based prompts on both tools:

- General Reasoning and Comprehension: Gemini gave accurate results

- Mathematical Reasoning: Both failed, but Gemini was somewhat closer to the answer

- Code Generation: Claude and Gemini both generated the code, but Gemini made sure to add an explanation for it.

Remember, the tests I have run are my personal experiences. So, to better understand and check where your specific needs are being met, experiment with the tools and decide which AI tool is better for you!

FAQs

Gemini AI is best known for handling text, audio/video, and multimodality. Meanwhile, Claude is more of a conversational tool that prioritizes your safety and is built with user intent in mind. It is compatible with a wide range of languages and responds according to your prompts.

When it comes to data analysis, math problems, and coding, the capabilities of Gemini and ChatGPT are nearly the same. Whether Gemini is better than ChatGPT is best settled through your own use.

Using Gemini AI is simple – just head to this link and type in your query or prompt in the blank box. It gives you instant responses and does not require you to give additional inputs. However, the more precise and detailed your prompt, the clearer Gemini’s response will be.